Are you 98% sure your data is correct?

In machine learning, neural networks can be overconfident when judging their own accuracy. So what is really behind AI confidence scores? Network confidence has some parallels with reality, but how can you trust that your network is correct?

Machine learning is a great way to analyze large amounts of visual data from images, films or live video footage and to identify people or meaningful objects in a scene. This data can then be processed for use in intelligent applications. Examples include facial recognition for security systems, tracking human attention in driver monitoring systems, and identifying defective objects on a factory assembly line. When the results of classifiers or object detectors are visualized, a percentage called the "confidence score" is often displayed next to each detected object. Below is an example of an object detector in an urban environment, with the confidence score shown above each detected object:

But what does a confidence score actually mean, and what is it used for? The general consensus describes a confidence score as a number between 0 and 1. That number represents the probability that a machine learning model's output is correct and satisfies the user's request.
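The article does not say how its detector computes confidence. One common way for a classifier to turn its raw output scores (logits) into numbers between 0 and 1 is the softmax function. The sketch below assumes that approach. The class names and logit values are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw class scores (logits) into values in (0, 1) that sum to 1."""
    # Subtracting the maximum improves numerical stability without changing the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs of a classifier for three classes.
labels = ["person", "bicycle", "car"]
logits = [2.0, 0.5, -1.0]

scores = softmax(logits)
best = max(range(len(scores)), key=scores.__getitem__)
print(labels[best], round(scores[best], 3))  # person 0.786
```

Nothing in this calculation consults the ground truth. The 0.786 is only a normalization of the network's own raw scores. It can therefore look like a probability of being correct without being calibrated as one.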

At first glance, this sounds excellent! It seems as if the network can somehow work out for itself how likely it is to be correct. On closer inspection, however, this is clearly not the case, as shown below.

A network cannot judge the correctness of its own output

If a network could judge the correctness of its own output, it would already know the answer. The answer would then always be either 0% or 100%, never anything in between. However, a closer look at a crop of our cityscape shows the following:

In this case, the network gave the person riding a scooter a confidence score of 81.2%.

To a human, the image clearly shows a person on a scooter, but for some reason the network is only 81.2% sure of it. What does 81.2% actually mean?

Does it mean that the network expects to be wrong about people on scooters 18.8% of the time? The image really does show a person on a scooter, so the certainty should be 100%. Does it mean we are seeing only 81.2% of a person? What is going on here?

The short answer is that confidence is not probability.

Instead, a network's confidence output should be read as a relative measure, not an absolute one. In other words, the network is more confident about a 98% detection than about an 81% detection. The values 98% and 81% themselves, however, have no absolute interpretation. This is also why a confidence score is often paired with a threshold that marks the boundary between true and false.
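A relative reading combined with a threshold can be sketched as follows. The detections and the threshold value of 0.5 are hypothetical and serve only as an illustration. The code only compares scores with each other and with the threshold. It never treats a score as a probability of being correct.

```python
# Made-up detections from one image: (label, confidence score).
detections = [
    ("traffic light", 0.35),
    ("car", 0.98),
    ("person", 0.812),
]

# Hypothetical cut-off chosen by the engineer. It is a tuning parameter,
# not something the network knows about its own correctness.
THRESHOLD = 0.5

def accept(dets, threshold):
    """Keep detections at or above the threshold, most confident first."""
    kept = [d for d in dets if d[1] >= threshold]
    return sorted(kept, key=lambda d: d[1], reverse=True)

print(accept(detections, THRESHOLD))
# [('car', 0.98), ('person', 0.812)]
```

In practice, such a threshold would have to be chosen by looking at how the network actually performs on labeled data.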

One way to see why confidence is relative rather than absolute is to notice how easily we can rescale the absolute value, as shown below.

Giving the data a confidence boost

The output confidence metric is often the result of a large number of calculations. However, there is a trivial way for an engineer to raise (or lower) the confidence level at will.

In our cityscape, the network was only 81.2% confident about the scooter rider. This is represented by the value 0.812. We can easily modify the network so that it takes the square root of the confidence before outputting the answer, which adds a single step to the network's computation. Instead of 0.812, we now get √0.812 ≈ 0.90, that is, 90%. We have just increased the network's confidence! Applying the same square-root operation again gives 95% confidence, and once more gives 97%. Not bad for a few trivial mathematical operations. Again, we conclude that absolute confidence values have no valid interpretation.
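The arithmetic can be reproduced in a few lines. Here, `boost` is a hypothetical name for the extra square-root step, and the three scores in the second part are made up. The sketch also checks that boosting leaves the ranking between detections unchanged.

```python
import math

def boost(confidence, times):
    """Hypothetical extra output step: take the square root `times` times."""
    for _ in range(times):
        confidence = math.sqrt(confidence)
    return confidence

# The scooter detection from the cityscape, boosted 0 to 3 times.
for n in range(4):
    print(n, round(boost(0.812, n), 3))
# 0 0.812
# 1 0.901
# 2 0.949
# 3 0.974

# Made-up scores for three detections in the same image.
scores = {"car": 0.98, "person": 0.812, "traffic light": 0.35}
boosted = {label: boost(s, 2) for label, s in scores.items()}

# The square root is monotonically increasing, so the ranking is unchanged.
print(sorted(scores, key=scores.get) == sorted(boosted, key=boosted.get))  # True
```

The same trick works in reverse: squaring the score instead of taking its root lowers it. A fixed threshold, however, would have to be transformed in the same way to keep accepting exactly the same detections.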

Clearly, we can easily push the absolute confidence score up or down. Absolute confidence values are therefore meaningless in a sense, because they can be manipulated to show almost any value. The square root is monotonically increasing, so the ordering between detections stays the same. Only the relative information survives.

Hand-to-hand combat with a grizzly bear

In a recent survey, YouGov asked Americans and Britons how confident they were that they could beat various animals in unarmed combat. What does this have to do with confidence in neural networks, you ask? Let's find out.

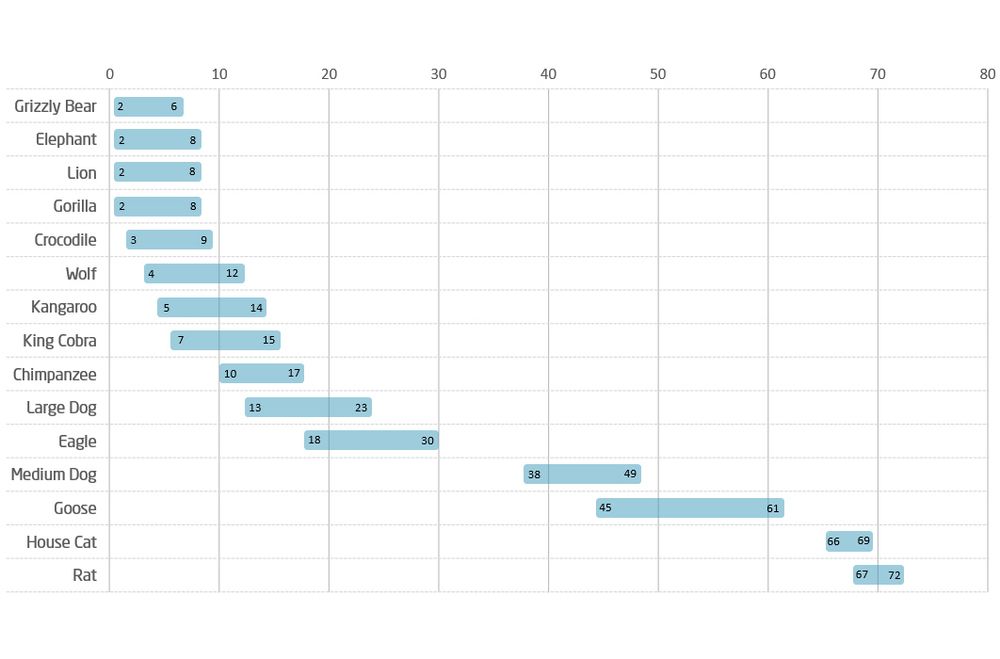

The chart shows how people in the US and the UK rate their own chances in hand-to-hand combat with various animals.

Source: Americans are more confident than Britons that they could beat any animal in a fight, YouGov UK

There are several analogies between people's self-assessed chances in a bare-handed fight with a grizzly bear and the confidence measure of a neural network.

First, it is a self-assessment. As with a network's confidence score, people are asked how confident they are about a situation they have probably never experienced. The network may never have seen a person on a scooter before, and most American men have never fought a grizzly bear.

Second, how should we interpret the numbers? It is hard to know what to make of 81% confidence that a person on a scooter is a person. In the same way, what does it really mean when 6% of American men believe they could beat a grizzly bear in hand-to-hand combat? Does it mean that if we asked 100 American men to fight a grizzly bear, 94 would run away and 6 would fight, and win? Again, human self-confidence is hard to interpret for any specific case.

Finally, relative self-assessment, like a neural network's confidence measure, does carry information. For example, one could conclude that Americans find it easier to win a fight against a goose (61%) than against a lion (8%). The absolute self-assessed confidence may say little. Still, given the choice, people should rather fight a goose than a lion.

How confident are you?

Ultimately, a confidence score on its own is a meaningless number, especially as an absolute value. Relative confidence scores can indicate where the network rates its own abilities more highly. Without a thorough analysis of the network's performance on real data, however, even relative confidence scores can be very misleading and can easily lead you to misplace your trust.
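One way to carry out such an analysis is a reliability check, a standard technique that the article itself does not prescribe. You compare the network's scores with labeled ground truth by grouping predictions into confidence bins and measuring how often the predictions in each bin were actually correct. Only then does a score such as 0.8 acquire a meaning, and only for that network and that data. The following is a minimal sketch. The function name, the bin count and the sample data are all hypothetical.

```python
def reliability_table(confidences, correct, n_bins=5):
    """Compare confidence scores with observed accuracy, bin by bin.

    confidences: the network's scores in [0, 1].
    correct: ground-truth outcome per prediction (True = the prediction was right).
    Returns (bin_low, bin_high, count, mean_confidence, accuracy) per non-empty bin.
    """
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        # Clamp so that a score of exactly 1.0 falls into the top bin.
        idx = min(int(c * n_bins), n_bins - 1)
        bins[idx].append((c, ok))

    rows = []
    for idx, items in enumerate(bins):
        if not items:
            continue
        mean_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(ok for _, ok in items) / len(items)
        rows.append((idx / n_bins, (idx + 1) / n_bins, len(items), mean_conf, accuracy))
    return rows

# Made-up scores and labels, for illustration only.
confidences = [0.95, 0.92, 0.85, 0.81, 0.62, 0.55, 0.30]
correct = [True, True, True, False, True, False, False]

for low, high, n, conf, acc in reliability_table(confidences, correct):
    print(f"{low:.1f}-{high:.1f}  n={n}  mean confidence={conf:.2f}  accuracy={acc:.2f}")
```

A bin's mean confidence and its observed accuracy may differ widely. If they do, the raw scores should not be read as probabilities of being correct. With only a handful of made-up samples, the numbers here are purely illustrative. A meaningful check needs a large, accurately labeled data set.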

So how can you give the numbers their proper meaning? The only way to reliably establish the accuracy of a neural network's output is to provide large amounts of structured input that is accurately and consistently labeled. The better you know your data, the better you can understand your confidence score.

At Neonode, we achieve this with computer-generated visual input, known as synthetic data. This is not only a more accurate way to train neural networks but also much faster than collecting real-world data. Synthetic data can also be used to test the robustness and generalization of machine learning models. It is not subject to the same biases and confounding factors as real data taken from actual photos or video.

Confidence in Neonode

Confidence is just one of many parameters that determine whether a system's output is valid. At Neonode, we build all of our networks ourselves and train them on extensive synthetic data that we also produce in-house. Because we control the entire process, we have developed an innovative method that generates similarity scores for an input image. This gives us far higher accuracy than relying on confidence scores alone.

This means that when our networks can no longer evaluate an image correctly, a more general and robust, though less accurate, network or process takes over. It also signals to the overall system that signal integrity has dropped.

Neonode does the heavy lifting of fully understanding our networks and interpreting their output. We know exactly when our networks deliver valid results and, more importantly, when they do not. Our customers can therefore focus on using our information and results to shape the end-user experience, instead of trying to judge whether the results are valid.

Confidence without context is meaningless.